Romay, Romina, Trubert, Jean-François, Lingrand, Diane, and Sassatelli, Lucile. (2021). Machine Learning-Guided Interaction for Gestural Composition of Non-Conventional Sonic Objects. In: Strange Objects: A New Art of Making. October 2021, La Rochelle, France. https://hal.science/hal-03618770 and https://hal.science/hal-03618880 C-ACTN and C-AFF

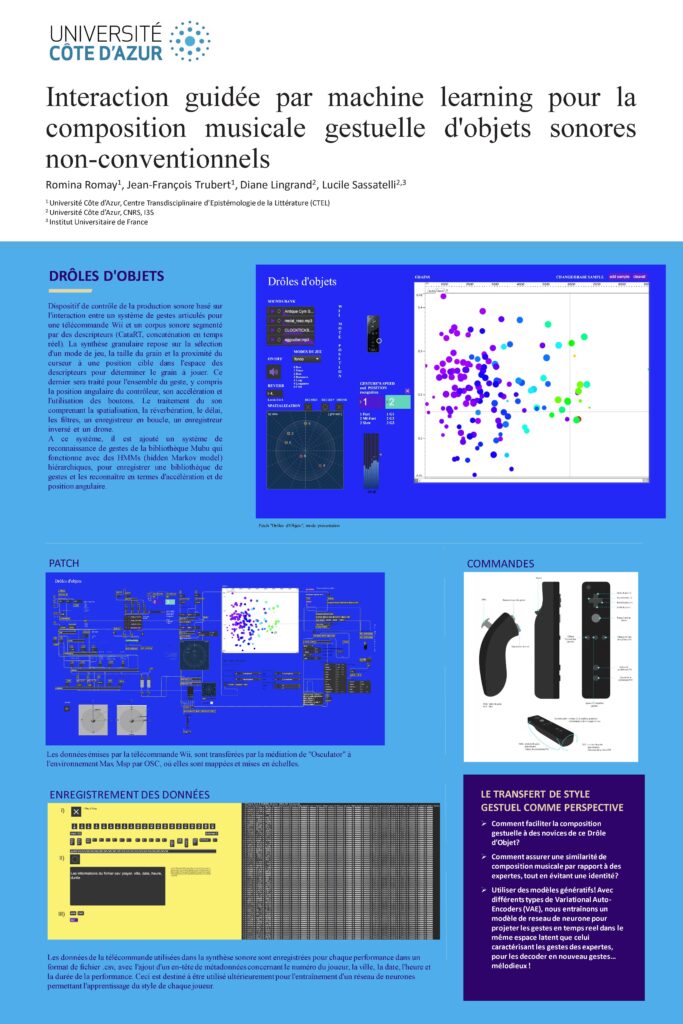

This communication presents a study on the use of machine learning for musical composition, specifically focused on gestural music with non-conventional electro-acoustic sonic objects. The objective is to consider gesture as a key element of composition, allowing interaction not only with traditional instruments but also with electro-acoustic sonic objects and various devices. The proposed method uses a generative gesture model based on a deep artificial neural network.

Paris, François, Giuglaris, Camille, Farhang, Alireza, and Romay, Romina S. (2020). MICROTONES: AN OPEN-SOURCE MODULE FOR SIBELIUS. In: Journées d’Informatique Musicale (JIM) Strasbourg 2020. October 2020, Strasbourg, France. https://hal.science/hal-03321245 ACLN

This article presents “Microtones”, a plugin developed by the CIRM (National Center for Musical Creation in Nice) for the Sibelius music notation software. The plugin facilitates the notation and audio playback of microtonal music, which explores intervals smaller than the standard semitone. Version 3 introduces significant innovations, such as scales defined to the nearest MIDI cent (one hundredth of a semitone) and customizable notation. The article traces the development history of Microtones, details its main features, and describes its practical applications in various artistic contexts, particularly in Western and non-European music.
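To make the MIDI-cent precision concrete, the following minimal Python sketch converts between MIDI cents and frequency. It is not taken from the plugin. It assumes the common convention that MIDI note 69 (6900 MIDI cents) is A4 at 440 Hz in 12-tone equal temperament, and the function names are hypothetical.

```python
import math

A4_MIDICENTS = 6900   # assumption: MIDI note 69 (A4) expressed in MIDI cents
A4_HZ = 440.0         # assumption: standard concert pitch

def midicents_to_hz(midicents: float) -> float:
    """Convert a pitch in MIDI cents to a frequency in Hz (1200 cents per octave)."""
    return A4_HZ * 2 ** ((midicents - A4_MIDICENTS) / 1200)

def hz_to_midicents(freq_hz: float) -> int:
    """Convert a frequency in Hz to the nearest whole MIDI cent."""
    return round(A4_MIDICENTS + 1200 * math.log2(freq_hz / A4_HZ))

# A quarter-tone above A4 lies 50 MIDI cents higher, halfway to A#4.
quarter_tone_above_a4 = midicents_to_hz(A4_MIDICENTS + 50)
```

Rounding to a whole MIDI cent, as in `hz_to_midicents`, is the sense in which a scale degree is precise "to the nearest MIDI cent".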

Romay, Romina Soledad. (2022). Creation Of Tools and Materials For Music Composition From Data and Metadata. In: Web Audio Conference 2022 (WAC 2022), Cannes, France. https://doi.org/10.5281/zenodo.6796396 C-AFF

This presentation introduces a model that transforms data and metadata into tools and materials for musical composition. Developed in Python, it relies on a hierarchy of musical language elements, established according to the significance of each value in the dataset being transcoded into music. Python, complemented by the score creation and analysis tools of the music21 library, streamlines data processing and makes the generation of musical materials and the act of composition more concrete and intuitive.
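The idea of pairing dataset fields with musical parameters according to their significance can be illustrated with a small, dependency-free Python sketch. It is only one possible reading of the approach, not the model presented at the conference. The parameter hierarchy, the output ranges, the function names, and the sample records are all assumptions made up for illustration.

```python
# Hypothetical sketch: none of these names or values come from the presentation.

# Assumed hierarchy of musical parameters, most salient first.
MUSICAL_PARAMETERS = ["pitch", "duration", "velocity"]

# Assumed output range for each parameter.
PARAMETER_RANGES = {
    "pitch": (48, 84),         # MIDI note numbers
    "duration": (0.25, 4.0),   # lengths in quarter notes
    "velocity": (30, 110),     # MIDI velocities
}

def scale(value, lo, hi, out_lo, out_hi):
    """Linearly map value from [lo, hi] to [out_lo, out_hi]."""
    if hi == lo:
        return (out_lo + out_hi) / 2  # constant field: use the middle of the range
    return out_lo + (value - lo) / (hi - lo) * (out_hi - out_lo)

def transcode(records, fields_by_significance):
    """Pair the most significant fields with the most salient musical parameters."""
    pairs = list(zip(fields_by_significance, MUSICAL_PARAMETERS))
    bounds = {
        field: (min(r[field] for r in records), max(r[field] for r in records))
        for field, _ in pairs
    }
    events = []
    for record in records:
        event = {}
        for field, parameter in pairs:
            lo, hi = bounds[field]
            out_lo, out_hi = PARAMETER_RANGES[parameter]
            value = scale(record[field], lo, hi, out_lo, out_hi)
            # Pitch and velocity are integers in MIDI; durations stay fractional.
            event[parameter] = value if parameter == "duration" else round(value)
        events.append(event)
    return events

# Made-up sample data, ranked by an assumed order of significance.
records = [
    {"temperature": 12.0, "humidity": 80, "wind": 5},
    {"temperature": 18.0, "humidity": 65, "wind": 20},
    {"temperature": 24.0, "humidity": 50, "wind": 35},
]
events = transcode(records, ["temperature", "humidity", "wind"])
```

Each resulting event is a plain dictionary of pitch, duration, and velocity. Turning such events into a notated score, for instance with music21, is left out of the sketch.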